The Evolution of the Militarized Data Broker

While often mythologized as having been created to champion human freedom, the internet and many of its most popular companies were directly birthed out of the national security apparatus of the United States.

UNLIMITED HANGOUT, by Mark Goodwin, January 16, 2025

Today, the world’s economy no longer runs on oil but on data. Shortly after the advent of the microprocessor came the internet, unleashing an onslaught of data running through the coils of fiber optic cables beneath the oceans and satellites above the skies. The internet is often posited as a liberator of humanity against oppressive nation-states, one that allows previously impossible interconnectivity and social organization between geographically separated cultures and helps them circumvent world governments’ monopoly on violence. Ironically, the internet itself was birthed out of the largest military empire of the modern world – the United States.

The ARPANET

Specifically, the internet began as ARPANET, a project of the Advanced Research Projects Agency (ARPA), which in 1972 was renamed the Defense Advanced Research Projects Agency (DARPA) and is currently housed within the Department of Defense. President Eisenhower created ARPA in 1958 within the Office of the Secretary of Defense (OSD) in direct response to the United States’ greatest military rival, the USSR, successfully launching Sputnik, the first artificial satellite to orbit Earth, equipped with data broadcasting technology. While the Sputnik launch is historically considered the birth of the Space Race, the formation of ARPA in reality began the now decades-long militarization of data brokers, quickly leading to world-changing developments in global positioning systems (GPS), the personal computer, networks of computational information processing (“time-sharing”), primordial artificial intelligence, and weaponized autonomous drone technology.

In October 1962, the recently formed ARPA appointed J.C.R. Licklider, a former MIT professor and vice president of Bolt Beranek and Newman (known as BBN, currently owned by defense contractor Raytheon), to head its Information Processing Techniques Office (IPTO). At BBN, Licklider had developed the earliest known ideas for a global computer network, publishing a series of memos in August 1962 that birthed his “Intergalactic Computer Network” concept. Six months after his appointment to ARPA, Licklider distributed a memo to his IPTO colleagues, addressed to “Members and Affiliates of the Intergalactic Computer Network,” describing a “time-sharing network of computers.” Building on a similar exploration of communal, distributed computation by John Forbes Nash, Jr. in his 1954 paper “Parallel Control,” commissioned by defense contractor RAND, the memo laid the foundational concepts for ARPANET, the first implementation of today’s Internet.

Prior to the technological innovations explored by Licklider and his ARPA colleagues, data communication (at this time, mainly voice via telephone lines) was based on circuit switching, in which each telephone call would be manually connected by a switch operator to establish a dedicated, end-to-end analog electrical connection between the two parties. The RAND Corporation’s Paul Baran, and later ARPA itself, began working on methods to keep data communication robust in the event of a partial disconnection, such as from a nuclear event or other act of war. This led to a distributed network of unmanned nodes that would split the desired information into smaller blocks of data, today referred to as packets, route them separately, and reassemble them once they reached the desired destination.
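To make the packet-switching idea concrete, here is a toy Python model: a message is split into numbered packets, the packets arrive in an arbitrary order (standing in for independent routes through the network), and the receiver reassembles them by sequence number. This is a minimal sketch under simplifying assumptions, not ARPANET's actual protocol; the packet size and the helper names `packetize`, `route` and `reassemble` are hypothetical, and real networks also deal with loss, retransmission and addressing.

```python
import random

# Hypothetical payload size in bytes; real networks use far larger packets.
PACKET_SIZE = 8


def packetize(message: bytes, size: int = PACKET_SIZE) -> list[tuple[int, bytes]]:
    """Split a message into (sequence_number, payload) packets."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]


def route(packets, seed=None):
    """Simulate independent routing: packets may arrive in any order."""
    arrived = list(packets)
    random.Random(seed).shuffle(arrived)
    return arrived


def reassemble(packets) -> bytes:
    """Rejoin packets at the destination by sorting on sequence number."""
    return b"".join(payload for _, payload in sorted(packets))


message = b"Survivable communications via distributed networks"
received = route(packetize(message), seed=1962)
assert reassemble(received) == message
```

Shuffling with a fixed seed keeps the demo reproducible; because reassembly sorts on the sequence number, the output matches the input regardless of arrival order.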

Though certainly unforeseen by the technologists of the time, this achievement of both distributed routing and global information settlement via data packets created an entirely new commodity: digital data.

A Brief History of Weaponized Financial Intelligence

Long before the USSR spooked the United States into formalizing ARPA over fears of militarized satellite applications after the Sputnik launch, data brokers had played a significant role in warfare, and specifically in the markets surrounding military conflict. …

As the front of modern warfare slowly evolved from direct military action into weaponized financial speculation, the market for data became just as valuable as the defense budget itself. It is for this reason that the necessity of sound data emerged as the foremost issue of national security, leading to a proliferation of advanced data brokers coming out of DARPA and the intelligence community, akin to the 21st century’s Manhattan Project.

The San Jose Project: Google, Facebook, and PayPal

With the creation of the CIA’s venture firm, In-Q-Tel, and the proliferation of Silicon Valley-based venture firms coalescing on Sand Hill Road in Palo Alto, CA, the financialization of a new crop of American data brokers was complete. The first firm to grace Sand Hill Road was Kleiner Perkins Caufield & Byers, better known as KPCB, which participated in funding internet pioneers Amazon, AOL, and Compaq, while also directly seeding Netscape and Google. KPCB partners have included such government stalwarts as former Vice President Al Gore, former Secretary of State Colin Powell, and Ted Schlein, the latter a board member of In-Q-Tel and a member of the NSA’s advisory board. KPCB also had an intimate connection with internet networking pioneer Sun Microsystems, best known for building out the majority of network switches and other infrastructure needed for a modern broadband economy.

… Perhaps the world’s most famous data broker, Google, whose founders both came out of Stanford University, was seeded by Sun Microsystems co-founder Andy Bechtolsheim and David Cheriton, his partner at the Ethernet switching company Granite Systems (later acquired by Cisco). Google’s most iconic CEO, Eric Schmidt, was formerly the CTO of Sun Microsystems.

The emergence of Silicon Valley out of the academic circuit in Northern California was no accident, and in fact was directly influenced by an unclassified program known as the Massive Digital Data Systems (MDDS) project. The MDDS was created with direct participation from the CIA, NSA, and DARPA itself within the computer science programs at Stanford and Caltech, alongside MIT, Harvard and Carnegie Mellon. … Over a few years, more than a dozen grants of several million dollars each were distributed via the National Science Foundation (NSF) to capture the most promising efforts, ensuring that those efforts would become intellectual property controlled by the United States regulatory regime.

… The first unclassified briefing for scientists, known as the “birds of a feather briefing,” was formalized during a 1995 conference in San Jose, CA, titled the “Birds of a Feather Session on the Intelligence Community Initiative in Massive Digital Data Systems.” That same year, one of the first MDDS grants was awarded to Stanford University, which was already a decade deep in working with NSF and DARPA grants. The primary objective of this grant was “query optimization of very complex queries,” and it was closely followed by a second grant that aimed to build a massive digital library on the internet. These two grants funded research by then-Stanford graduate students and future Google cofounders Sergey Brin and Larry Page. Two intelligence-community managers regularly met with Brin while he was still at Stanford completing the research that would lead to the incorporation of Google, all paid for by grants provided by the NSA and CIA via MDDS.

… It was also during these formative years that the PayPal team worked closely with the intelligence community. … In 2003, a year after PayPal was sold to eBay, Thiel approached Alex Karp, a fellow Stanford alumnus, with a new venture concept: “Why not use Igor to track terrorist networks through their financial transactions?” Thiel took funds from the PayPal sale to seed the company, and after a few years of pitching investors, the newly formed Palantir received an estimated $2 million investment from the CIA’s venture capital firm, In-Q-Tel.

… As of 2013, Palantir’s client list included “the CIA, the FBI, the NSA, the Centre for Disease Control, the Marine Corps, the Air Force, Special Operations Command, West Point and the IRS” with around “50% of its business” coming from public sector contracts. … As The Guardian reports: “Palantir does not just provide the Pentagon with a machine for global surveillance and the data-efficient fighting of war, it runs Wall Street, too.”

Facebook, not unlike Palantir, was one of the vehicles used to privatize controversial U.S. military surveillance projects after 9/11, having also been birthed out of one of the MDDS partners, Harvard University. PayPal and Palantir co-founder Peter Thiel became Facebook’s first significant investor at the behest of file-sharing pioneer Sean Parker, whose first contact with the CIA took place at age 16. … Facebook’s long-standing ties to the military and intelligence communities go far beyond its origins, including revelations in the Snowden leaks about its collaboration with spy agencies and its role in influence operations, some of which have even directly involved Google and Palantir.

The Military Origins of Facebook

Facebook’s growing role in the ever-expanding surveillance and “pre-crime” apparatus of the national security state demands new scrutiny of the company’s origins and its products as they relate to a former, controversial DARPA-run surveillance program that was essentially analogous to what is currently the world’s largest social network.

An unspoken outcome of the global proliferation of Facebook was the sly, roundabout creation of the first digital ID system – a necessity for the coming digital economy. Users would set up their profiles by feeding the social network with a plethora of personal information, with Facebook being able to use this data to generate large webs of connectivity between otherwise unknown social groups. There is even evidence that Facebook generated placeholder accounts for individuals that appeared in user data but did not have a profile of their own. Both Google and PayPal would also use similar digital identification methods to allow users to sign into other websites, creating interoperable identification systems that could permeate the internet.

A similar evolution is occurring in the financial sector, as data broker social networks, including Facebook and Musk’s X (formerly Twitter), are positioning themselves as the future of financial services. …

From Public-Private, to Private-Public

As outlined above, it is clear that the public sector’s intelligence community used the veil of the private sector to establish financial incentives and commercial applications to build out the modern data economy. A simple glance at the seven largest stocks in the American economy demonstrates this concept: Meta (Facebook), Alphabet (Google), and Amazon (whose founder, Jeff Bezos, is the grandson of ARPA founder Lawrence Preston Gise) lead the software side, while Microsoft, Apple, NVIDIA and Tesla lead the hardware component. While many of these companies had egregious ties to the intelligence community and the public sector during their incubation, these private sector companies are now driving the globalization and national security interests of the public sector.

The future of the American data economy is firmly situated between two pillars: artificial intelligence and blockchain technology. With the incoming Trump administration’s close advisory ties to PayPal, Tether, Facebook, Palantir, Tesla and SpaceX, it is clear that the data brokers have come home to roost on Pennsylvania Avenue. AI requires massive amounts of sound data to be of any use to technologists, and the data provided by these private sector stalwarts is poised to feed their learning models, surely after securing hefty government contracts. Private companies using public blockchains to issue their tokens not only generate significant opportunities for the United States to address its debt problem, but simultaneously serve as a “boon in surveillance,” as a former CIA director put it.

The Trump administration’s embrace of the blockchain, itself the final iteration of the public-private commercialization of data despite its libertarian posturing, reveals the culmination of a decades-long technocratic, dialectical Trojan horse. Nearly all of the foundational technology needed to push the world into this new financial system was cultivated in the shadows by the military and intelligence community of the world’s largest empire. While technology can surely offer solutions for greater efficiency and economic prosperity, the very same tools can also be used to further enslave the citizens of the world.

What once appeared as a guiding light beckoning us towards free speech and financial freedom has revealed itself to be nothing but the shine of Uncle Sam’s boot making its next step. https://unlimitedhangout.com/2025/01/investigative-reports/the-evolution-of-the-militarized-data-broker/

Nuclear Waste: The Dark Side of the Microreactor Boom

By Haley Zaremba – Jan 15, 2025, https://oilprice.com/Alternative-Energy/Nuclear-Power/Nuclear-Waste-The-Dark-Side-of-the-Microreactor-Boom.html

The nuclear energy sector is experiencing a revival, driven by factors such as increased energy demand and support from governments and tech companies.

Microreactors, a new form of nuclear technology, are being touted for their lower costs and smaller size, but they produce a significantly higher volume of nuclear waste.

Despite concerns about nuclear waste, the development and deployment of microreactors continue to gain momentum, driven in part by the growing energy needs of AI.

Nuclear energy is ready for its close-up. After decades of steep decline in the sector and relatively high levels of public mistrust for the controversial technology, the tides are turning in favor of a nuclear energy renaissance. The public memory of disasters like Fukushima, Three Mile Island, and Chernobyl is fading, and the benefits of nuclear – a zero-carbon, baseload energy source – are getting harder to ignore as deadlines for climate commitments grow closer and energy demand ticks ever higher. But the future of the nuclear energy sector will look a bit different than its last boom time, from technological advances to the makeup of its biggest backers.

In Russia and Asia, nuclear energy has stayed popular, but in the West, nuclear had almost entirely fallen out of favor up until the last few years. In the United States, the Biden administration helped to build momentum for a nuclear comeback through its flagship Inflation Reduction Act, which included tax breaks and other incentives for various nodes of the nuclear sector. Over in Europe, nuclear advocates are trying to push through policy supporting nuclear power as Europe reconfigures its energy landscape to contend with energy sanctions on Russia. Public opinion in the West is also shifting in favor of nuclear power. As of 2023, a Gallup poll showed that support for nuclear energy in the United States was at a 10-year high.

Some of the biggest proponents of the nuclear energy renaissance are tech bigwigs, who point to the power source as a critical solution to feed the runaway power demand of artificial intelligence. In fact, the growth of data centers’ energy demand is so extreme that it will soon outstrip the United States’ production potential if nuclear energy and a host of other low-carbon solutions are not put to use, and soon. Tech bigwigs, therefore, have good reason to back nuclear energy – oh, and they also just so happen to be behind a rash of nuclear energy startups.

But the new kind of nuclear that these companies are trying to bring onto the scene will not be the same as the nuclear technologies that had so solidly fallen out of favor over the last few decades. Traditional nuclear energy has a number of drawbacks, most notably its extremely high up-front costs and the additional costly burden of storing hazardous nuclear waste. New nuclear advocates want to confront the former challenge by rolling out much smaller versions of nuclear reactors, which can essentially be mass-produced and then installed on site for much lower development costs.

Currently, the industry is undergoing a competitive race to corner the market on nuclear microreactors, which are about the size of a shipping container and function somewhat like a giant battery pack. “Microreactors have the ability to provide clean energy and have passive safety features, which decrease the risk of radioactive releases,” Euronews recently reported. “They are also much cheaper than bigger plants as they are factory-built and then installed where they are needed in modules.”

These microreactors can be used in a huge range of applications and do not require any on-site workers for their operation and maintenance. Instead, they can be operated remotely and autonomously. As a result, they have much lower overhead costs as well as lower up-front costs. So what’s the downside?

Well, it’s a big one. Scientists have found that, contrary to what nuclear advocates have touted, small nuclear reactors produce extremely high levels of nuclear waste, and could even be worse for the planet than their full-sized predecessors. “Our results show that most small modular reactor designs will actually increase the volume of nuclear waste in need of management and disposal, by factors of 2 to 30,” said Stanford study lead author Lindsay Krall. “These findings stand in sharp contrast to the cost and waste reduction benefits that advocates have claimed for advanced nuclear technologies.” Some members of the scientific community have taken notice: “Say no to small modular reactors,” blasted a recent headline from the Bulletin of the Atomic Scientists.

However, the voices decrying the rollout of small reactors and microreactors seem to be in the minority, as the Silicon Valley-backed industry barrels full speed ahead. Countries across Europe have jumped into the race as well, and the race’s momentum, fuelled by the seemingly unstoppable expansion of AI, is unlikely to be impeded by scientists shouting doomsday warnings, however well-founded, from the sidelines.

America’s ‘zombie’ nuclear reactors to be revived to power Trump golden age

By ELLYN LAPOINTE FOR DAILYMAIL.COM, 24 January 2025 https://www.dailymail.co.uk/sciencetech/article-14317459/zombie-nuclear-reactors-revived-ai-demand-trump-stargate-south-carolina.html

A defunct nuclear power plant will be revived to power Donald Trump’s new half-trillion-dollar project to make America the world’s artificial intelligence powerhouse.

The state-owned utility Santee Cooper — the largest power provider in South Carolina — said Wednesday that it is seeking buyers to complete construction on a partially-built project that was abandoned in 2017.

The VC Summer Nuclear Power Station, which houses two unfinished nuclear reactors, was scrapped following years of costly delays and the bankruptcy of its contractor, according to a company statement.

But now, the utility is hoping tech giants such as Amazon and Microsoft will be willing to finish the project, as they are seeking clean energy sources to fuel data centers for AI.

‘We are seeing renewed interest in nuclear energy, fueled by advanced manufacturing investments, AI-driven data center demand, and the tech industry’s zero-carbon targets,’ said Santee Cooper President and CEO Jimmy Staton.

This announcement came as President Donald Trump unveiled a $500bn AI project which he says will jumpstart America’s ‘golden age.’

The project, dubbed the ‘Stargate Initiative,’ is a massive private sector deal to expand the nation’s AI infrastructure, led by Big Tech companies such as OpenAI, SoftBank and Oracle. It is the largest AI infrastructure project in history.

Trump stated that Stargate will create over 100,000 new jobs ‘almost immediately.’

‘This monumental undertaking is a resounding declaration of confidence in America’s potential under a new president,’ he said during a Tuesday briefing.

Trump emphasized that the project aims to sharpen the country’s technological edge against competitors, notably China.

He held the briefing in the White House’s Roosevelt Room alongside SoftBank CEO Masayoshi Son, Oracle’s Larry Ellison and OpenAI’s Sam Altman.

The US AI industry has already grown rapidly in recent years, but one of the biggest hurdles to expansion is the energy cost of running data centers.

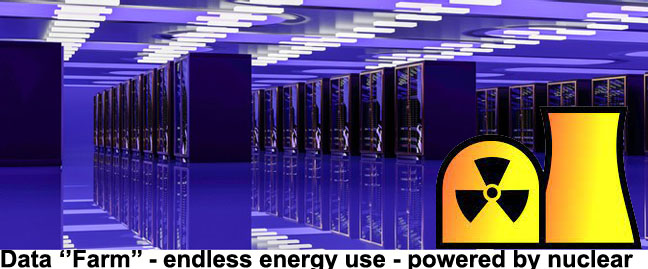

A recent Department of Energy (DOE) report found that total data center electricity usage more than tripled from 2014 to 2023, rising from 58 TWh to 176 TWh.

The DOE estimates that by 2028, data center energy demand will increase to between 325 and 580 TWh, consuming up to 12 percent of US electricity.
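As a rough check on the DOE figures quoted above, the growth factors work out as follows. These are simple ratios of the reported values, taking the 2028 range to be in TWh like the 2023 figure; they say nothing about the assumptions behind the DOE scenarios.

$$
\frac{176\ \text{TWh}}{58\ \text{TWh}} \approx 3.03, \qquad
\frac{325\ \text{TWh}}{176\ \text{TWh}} \approx 1.85, \qquad
\frac{580\ \text{TWh}}{176\ \text{TWh}} \approx 3.30
$$

In other words, after roughly tripling over 2014 to 2023, data center demand would nearly double at the low end, or more than triple again at the high end, by 2028.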

Santee Cooper said it was working with the investment firm Centerview Partners LLC to vet buyer proposals, which it will accept until May 5.

The exact asking price has not been publicly named, but the Wall Street Journal reported that completion of the reactors would cost the buyer billions of dollars over several years.

This would not be the first time that Big Tech bankrolled a nuclear energy project. Last September, Microsoft struck a deal with the Baltimore-based energy company Constellation Energy to restart the Three Mile Island nuclear plant in Pennsylvania.

This plant was the site of the worst nuclear power accident in US history, when its Unit 2 reactor partially melted down in 1979 and released radioactive gases and iodine into the environment.

Amazon, Meta and Google also sought or signed deals to back nuclear energy projects in 2024, similarly motivated by their AI endeavors.

The federal government has also shown support for the resurgence of nuclear power.

In September, the DOE finalized a $1.52 billion loan guarantee to help Holtec International, a New Jersey manufacturing company, recommission the Palisades nuclear plant in Michigan, marking the first-ever revival of a nuclear power plant in the US.

The Biden administration and Congress also offered billions of dollars in subsidies to maintain older nuclear plants and fund the construction of new reactors.

President Trump has largely opposed and sought to repeal the former president’s energy and climate policies, but has said he supports nuclear energy.

In its first actions this week, the new administration issued an executive order directing the heads of ‘all agencies’ to identify regulations that ‘impose an undue burden’ on domestic energy resources, including nuclear power.

It also instructs the US Geological Survey ‘to consider updating the Survey’s list of critical minerals, including for the potential of including uranium,’ which can be refined into nuclear fuel.

Nuclear fusion: it’s time for a reality check

Significant obstacles lie ahead in the quest for commercially viable nuclear fusion, writes Luca Garzotti, https://www.theguardian.com/science/2025/jan/22/nuclear-fusion-its-time-for-a-reality-check

I can’t help thinking Ed Miliband has not been accurately briefed when he says a government funding pledge means Britain is within “grasping distance” of “secure, clean, unlimited energy” from nuclear fusion (Ministers pledge record £410m to support UK nuclear fusion energy, 16 January).

Before we start talking about nuclear fusion via magnetic confinement as a commercially viable source of energy, five main challenges have to be met by the scientific community, each one of them a potential showstopper. We have to demonstrate:

1) That we can run a burning plasma for hours (if not in steady state) with Q=40 (Q being the ratio between power coming from the fusion reactions and power used to heat the plasma) without disruptions. If all goes well, at some point in the future, the ITER fusion project your article mentions will run a burning plasma with Q=10 for about 10 minutes.

2) That we can handle and exhaust the heat escaping from such a plasma and impinging on the first wall of the confining device.

3) That we can breed in the blanket of a power plant more tritium than we burn in the plasma. (Tritium is not readily available in nature and must be produced.)

4) That the materials used to build such a plant can withstand the neutron fluence coming from the burning plasma without losing their structural properties and without becoming excessively radioactive.

5) That a fusion reactor can be operated reliably and maintained by remote handling, minimising the downtime needed for maintenance.
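The gain factor Q in point 1 can be written explicitly, and a worked example shows what these targets mean in power terms. The heating and fusion powers below are an illustrative assumption drawn from ITER's commonly cited design goal of roughly 500 MW of fusion power from roughly 50 MW of heating; they are not figures given in this letter.

$$
Q = \frac{P_{\text{fus}}}{P_{\text{heat}}}, \qquad
P_{\text{heat}} = 50\ \text{MW},\; Q = 10 \;\Rightarrow\; P_{\text{fus}} = 10 \times 50\ \text{MW} = 500\ \text{MW}
$$

$$
Q = 40 \text{ at the same heating power} \;\Rightarrow\; P_{\text{fus}} = 40 \times 50\ \text{MW} = 2000\ \text{MW}
$$

By this definition, Q = 1 is breakeven, where the plasma returns exactly as much power as is used to heat it. Q also leaves out the electricity consumed by the rest of the plant, which is why a commercial plant is expected to need a gain well above ITER's target of 10.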

These are massive scientific and technological challenges, the solution of which (despite progress being made) is not in the near future. The reward for finding a solution will be immense and therefore research must continue with humility and tenacity, but there is no room for overoptimistic or triumphalist statements, which can only undermine the credibility of the scientists and engineers working on the problem.

Operation Stargate, the project to make AI an “essential infrastructure”

Koohan Paik-Mander. 22 Jan 25

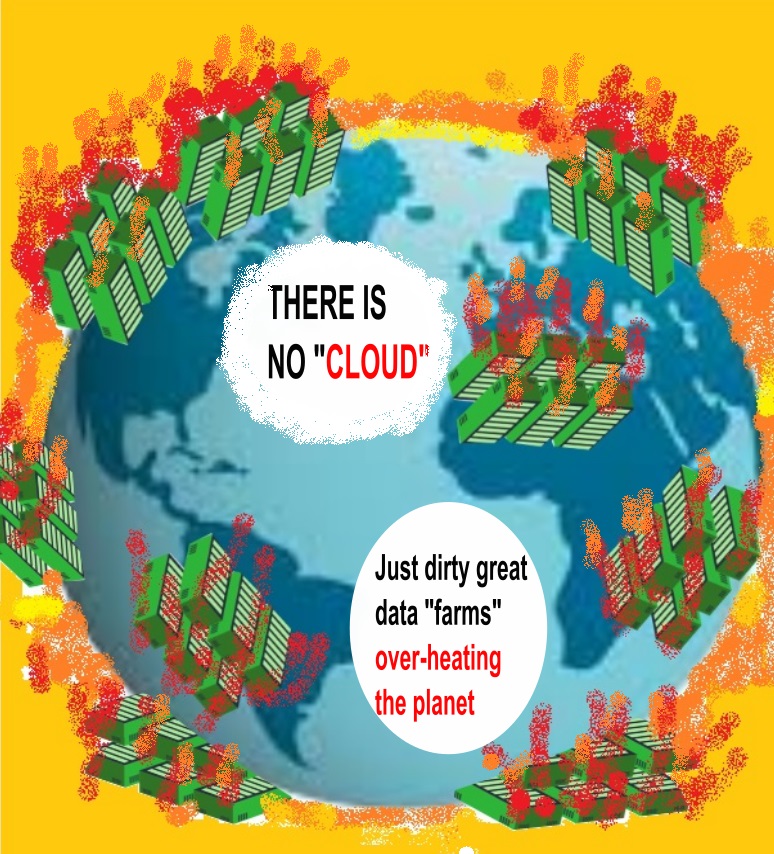

Essentially, Elon Musk is rejiggering all of America’s society and economy to recalibrate them around AI. We are expected to give up our land, water and dreams of a livable climate in order to make the data centers operational.

I fear we will very soon be seeing a pivot from endless war on other countries to a focused crushing of the American people, under the banner of Operation Stargate, the project to make AI an “essential infrastructure,” like water or electricity. On the ground, that will look like enormous data centers of a half-million square feet each (the size of 2-1/2 Walmart Superstores or 8.5 football fields) constructed in clusters all over the nation. Wherever they are built, all nature perishes, because it is a wholesale smothering of the earth with concrete. They are now building ten of these data centers in Texas, as the first phase of this scourge on the American people. Each one of the ten uses between 20 and upwards of 100 megawatts of power. The entire island of Hawaii, where I live, uses 180 megawatts. This is why they pulled the U.S. out of the Paris Accord, which would obstruct this administration’s central plan to entrench AI infrastructure, a plan arguably more central than deportations or building a wall.
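The scale comparison in this paragraph is easy to make explicit. The short Python sketch below multiplies out the author's figures (ten facilities at 20 to 100 MW each) and compares the total with the 180 MW quoted for the island of Hawaii. It assumes, for simplicity, that every facility draws its stated power at the same time and that the 180 MW figure is a comparable power draw; the constant and function names are illustrative only.

```python
# Back-of-envelope comparison using the figures quoted in the text.
# Assumption: each facility draws its stated power simultaneously.
HAWAII_ISLAND_MW = 180   # island-wide figure quoted by the author
N_CENTERS = 10           # data centers under construction in Texas
LOW_MW, HIGH_MW = 20, 100  # per-facility range quoted by the author


def cluster_range(n, low, high):
    """Return the (min, max) combined draw in MW for n facilities."""
    return n * low, n * high


low_total, high_total = cluster_range(N_CENTERS, LOW_MW, HIGH_MW)
print(f"Cluster draw: {low_total}-{high_total} MW")
print(f"Relative to Hawaii Island: {low_total / HAWAII_ISLAND_MW:.1f}x"
      f" to {high_total / HAWAII_ISLAND_MW:.1f}x")
```

With these inputs the script prints a combined draw of 200 to 1,000 MW, roughly 1.1 to 5.6 times the island's figure.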

They are proposing using federal lands as well, such as national parks, for these data centers. The first $500 billion was committed to their construction at the first Trump press conference. At the press conference, they didn’t mention any of the above. They just talked about how AI was going to cure cancer. It reminded me of the U.S. general telling the Bikini islanders that the atomic tests were to be “for the good of mankind.”

Each data center is filled from ceiling to floor with stacks of metal boxes — computers which process all the AI calculations, which are in the billions per second, and which cause the machines to heat up. To cool them, giant pipes filled with water snake through the basements of these buildings with capillaries of cooling liquid that branch off up to run alongside each of the machines. The water consumption for cooling data centers is enormous.

They want to cover the continent with these data centers, much like the initiative to cover it with interstate highways. But I don’t see it like that. For me, it is like watching the tracks being laid that would guide train-cars full of Jews and other “undesirables” to the incinerators at Auschwitz and Dachau. It is like watching the gureombi at Gangjeong be blasted, only to be paved over to build a navy base. It is like watching the limestone forest on Guam be razed to construct the live-fire training range. It is like watching the farming villages at Pyeongtaek protest the construction of one of the largest U.S. bases in the world… So I’m used to watching the horror of autocracy smashing nature and community. The only difference is, now it’s in my own country.

“This land is your land, this land is my land.” We used to sing that song in grade school — remember?

Operation Stargate is building not only the hardware of this infrastructure; it is building the software as well. It seeks to fully automate government. It is a libertarian’s wet dream. Its planners in Silicon Valley saw their wealth balloon during the pandemic when everyone went online. The idea behind a fully automated civilization is to revive that scale of profit acceleration for Silicon Valley by getting society online as much as possible. Remember how every meeting, every lesson, every funeral, every yoga class (everything) was done online? They want that back again, but with many added AI “bots,” and what better place to start than government? Just as all the schools were online during the pandemic, the plan is for all of government to be online. Soon, trying to get assistance from City Hall will be as challenging as talking to a person at Yahoo. Maybe they’ll farm out the job of answering calls to our virtual City Hall to a bunch of underpaid workers in the Philippines or India.

But full automation is not truly human-free. AI requires a constant stream of data to train it. Slave-wage workers will be hired in Africa to “annotate”; that is, to sit at computers and click meaningless boxes to train the AI models. The American people will also play a role in training. We’ll be surrounded by a smart grid and smart meters, smart appliances, smart cars, smart air fryers, smart homes: everything “smart,” which really means connected to sensors that record our voices, our images, our behavior patterns and any shifts in the environment. Those recordings provide more data streams to feed AI. Did you know that the reason they no longer manufacture stick shifts is that the sensors can’t translate manual transmission into usable data?

Data is considered more valuable than money these days. In fact, the U.S. government pays for satellite rental (most likely to Musk’s Starlink) in data from surveillance.

In the AI world, the word “surveillance” refers not only to cameras and microphones, but to any of the ways these sensors extract data. Interactions with government agencies will become further opportunities to collect our data. Every interaction will be surveillance. You see, an AI infrastructure cannot exist without a surveillance infrastructure, a surveillance state.

The most egregious proposal is called MedShield, which was proposed in Congress last term but is certain to return, because it would be a means for the much-needed data extraction. It proposes to transform the Department of Health and Human Services into a biowarfare hub. Combined with the “AI first, regulation last” mentality of the incoming administration, it would amount to a full-spectrum assault on Nature and human rights.

Introduced by Sen. Mike Rounds (R-South Dakota), the bill came off the drawing board at the Special Competitive Studies Project (SCSP), a think tank founded by former Google CEO Eric Schmidt.

The bill’s stated purpose was to require a pandemic preparedness and response program. Couched in the euphemism “surveillance,” the bill’s passage would ultimately mandate the continual extraction of DNA samples from hundreds of millions of Americans in order to train AI models, ostensibly to track and monitor biological attacks, and to create antidotes for them. There has been some talk about nationalistically spinning this as “patriotism,” as if it were the postwar Victory Garden movement. But nothing in the bill’s text hints at the egregious Constitutional violations of privacy and individual agency that this would pose.

Nor does the Act explicate that, as a general rule, all supposedly “defensive” weapons can be inversely deployed for offense. Building an arsenal of millions of new synthetic life forms to defend against a biological weapons attack has the potential, if not the covert intent, to irreversibly unleash new viruses, bacteria, proteins and other organisms into the ecological systems. Case in point: we saw how the “defensive” development of the atom bomb played out.

The MedShield Act would employ technology that works with large-language model AI much in the same way that ChatGPT operates. The large-language model consists of a maze of networks with billions of artificial neurons. It is trained by inputting hundreds of millions, perhaps billions, of relevant data points. (This is why a surveillance state is essential for any nation wishing global AI dominance: to continually feed the AI’s hungry maw.) Once trained by the data set to recognize patterns and relationships, a query can be entered into the AI, which then processes it by making millions of calculations before spitting out its answer.

For example, generative AI could be queried to “make a Covid-like virus that doesn’t show symptoms until at least five days after contraction.” Or “what should go into a vaccine to inoculate against a flu virus that was engineered to last 30 days?” The possibilities for biological warfare are endless, which is why weapons technology companies like Palantir (maker of Gaza-tested Lavender AI) are studying how to use AI to create and mitigate biological threats. Los Alamos National Laboratory is teaming up with OpenAI to do the same.

According to the SCSP, MedShield is necessary in order to keep ahead of China in the AI arms race. Here’s how the SCSP explains MedShield:

“What is MedShield? Imagine a system that could protect us from dangerous pathogens and bioweapons as effectively as our military would defend against inbound ballistic missiles (NORAD) or nuclear attacks (STRATCOM). That’s the idea behind MedShield. This potential national technology program is a bold, fully-integrated, AI-enabled system-of-systems that could neutralize a biological threat, whether from a state, non-state actor, or nature (biological threats go beyond pathogens into five basic types). MedShield would create one holistic “kill chain” against biological threats.”

It is a discomfiting notion that protocols would be implemented that would connect our national healthcare department to the Pentagon, and would be modeled after the NORAD and STRATCOM war commands. Could a more sinister, inappropriate framing exist for the office charged with the well-being of our women, men and children? I think not.

All generative AI, not only MedShield, requires a surveillance infrastructure of continual data extraction. That data could be gathered legally or, as is more often the case, surreptitiously, from everyday citizens, indigenous peoples, prisoners, marginalized communities, or the Global South. The human-rights threats of an unregulated, comprehensive, AI-driven federal government would make the East German Stasi look like Munchkinland.

Essentially, Elon Musk is rejiggering all of America’s society and economy to recalibrate itself around AI. We are expected to give up our land, water and dreams of a livable climate in order to make the data centers operational. We are expected to let our government transform into a data extraction apparatus. AI runs on energy, but it lives on data. It can’t live without extracting our data. Our role in society is being reduced to “data resource.” A commodity. If we serve AI, as is the plan, we are not citizens but slaves.

The endless war agenda of the Democrats will be allowed to wind down by Silicon Valley because they can make just as much money, if not more, installing infrastructures for domestic terror instead. Unlike the legacy warmongers like Lockheed and Raytheon, Elon Musk and his digital cabal don’t care who or what they destroy. Everything we are is fodder for their profit machine.

The only answer is to return to an embodied existence, offline. Ditch the digital. Resist smart anything. Oppose the AI-ification of government.

Weatherwatch: Could small nuclear reactors help curb extreme weather? There’s a credibility gap.

As natural disasters make the need to cut CO2 emissions clearer than ever, the energy demand of AI systems is about to soar.

Paul Brown 27 Jan 25 – https://www.theguardian.com/world/2025/jan/17/weatherwatch-could-small-nuclear-reactors-help-curb-extreme-weather

Violent weather events have been top of the news agenda for weeks, with scientists and fact-based news organisations attributing their increased severity to climate breakdown. The scientists consulted have all emphasised the need to cut greenhouse gas emissions.

At the same time there are predictions about artificial intelligence and datacentres urgently needing vast amounts of new electricity sources to keep them running. Small modular nuclear reactors (SMRs) have been touted as the green solution. The reports suggest that SMRs are just around the corner and will be up and running in the 2030s. Google first ordered seven, followed by Amazon, Microsoft and Meta each ordering more.

With billions of dollars on offer, many startup and established nuclear companies are getting in on the act. More than 90 separate designs for SMRs are being marketed across the world. Many governments, including the UK, are pouring money into design competitions and other ways to incentivise development.

In all this there is a credibility gap. None of the reactor designs have left the drawing board, prototypes have not been built or safety checks begun, and costs are at best optimistic guesses. SMRs may succeed, but let big tech gamble their spare billions on them while the rest of us are building cheap renewables we know work.

In Flamanville, EPR vibrations weigh down EDF

Blast 15th Jan 2025

https://www.blast-info.fr/articles/2025/a-flamanville-les-vibrations-de-lepr-plombent-edf-27fa5zyHQ6mpDzOKgti6kw

Last week, Luc Rémont, CEO of EDF, received a worrying report from the engineers working on the Flamanville EPR. It reveals a recurring problem of excessive vibrations, and it indicates that no one knows whether the EPR will be able to operate at full power. Revelations.

At EDF, troubles are flying in squadrons. This Tuesday, January 14, the Court of Auditors published a new report on the Flamanville EPR. The venerable institution on Rue Cambon (Paris) now estimates the final cost of the project at 23.7 billion euros, significantly higher than the Court’s previous assessment in 2020: 19.1 billion.
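The size of that revision is easy to check. This short Python sketch uses only the two Court of Auditors figures quoted above (in billions of euros); the function name is illustrative, not taken from the report.

```python
# Relative growth of the Court of Auditors' estimate for Flamanville 3.
# Figures are in billions of euros, as quoted in the article.

def percent_increase(old: float, new: float) -> float:
    """Return the change from `old` to `new` as a percentage of `old`."""
    return (new - old) / old * 100.0

estimate_2020 = 19.1  # Court of Auditors, 2020 assessment
estimate_2025 = 23.7  # Court of Auditors, January 2025 report

increase = percent_increase(estimate_2020, estimate_2025)
print(f"+{estimate_2025 - estimate_2020:.1f} billion euros ({increase:.1f}%)")
```

On these figures the Court’s estimate rose by 4.6 billion euros, roughly a quarter, between the two assessments.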

Adding insult to injury, the report specifies that “the calculations made by the Court result in a mediocre profitability for Flamanville 3”: the tiny margin that EDF could generate will not be enough to repay the cost of the loans! For that to happen, the EPR must one day operate at full power. And of that, even the EDF teams are no longer really convinced.

The scene takes place at a dinner party in Paris late last week. “We were in a meeting in the CEO’s office and everything was going well. But then he received a report from Flamanville and the atmosphere suddenly cooled,” says a senior EDF executive who was present at the meeting. If Luc Rémont, the CEO, did not fall off his seat when he read the report, he came close.

The cause? The engineers working on the reactor’s start-up have a doubt. And a big one. “They don’t know if the EPR will be able to operate at full power,” says this senior executive.

This question, which exists among many employees who worked on the nightmarish reactor construction site (twelve years behind schedule), is now shared by the teams who took charge of the reactor. And it is based on an observation: contrary to what EDF’s communication claims, the vibration problems affecting the primary circuit of the reactor are far from being resolved. “The report confirms that there are still problems with excessive vibrations,” says the decidedly very talkative manager.

At the meeting of the local information committee for the Flamanville nuclear power plant in April 2024, held a few days before the ASN authorised EDF to load nuclear fuel into the reactor vessel, the utility had nevertheless brushed aside the issue of vibrations, stating, clearly a little too quickly, that everything was sorted.

But on the executive floors in Paris, the knives are already being sharpened and the hunt for a culprit is on. Who will take the blame? One name is on everyone’s mind: that of Alain Morvan, the director of the EPR project until last October, accused in veiled terms of having swept too much under the carpet.

Contacted by email on Tuesday 14 January late in the morning, EDF indicated that it was sticking to its construction cost of 13.2 billion (excluding interim interest). But it refused to comment on our information on the vibrations. Questioned the same day also by email, the Nuclear Safety and Radiation Protection Authority (ASNR), resulting from the merger of the ASN with the IRSN, did not respond to us.

UK will explore nuclear power for new AI data centre plan

The UK is planning special districts for constructing data centers and will explore dedicating nuclear energy to the sites as part of a Labour government project to boost technology growth and the ecosystem for artificial intelligence. These “AI Growth Zones” will include enhanced access to electricity and easier planning approvals for data centers, the government said on Sunday. It said the first such zone will be in Culham, home of the UK Atomic Energy Authority.

Bloomberg 12th Jan 2025,

https://www.bloomberg.com/news/articles/2025-01-12/uk-will-explore-nuclear-power-for-new-ai-data-center-plan

While Los Angeles burns, AI fans the flames

Artificial intelligence is a water-guzzling industry hastening future climate crises from California’s own backyard.

By Schuyler Mitchell, Truthout, January 11, 2025

“………………………………………………. Trump’s latest smear campaign is little more than political football. But the renewed attention on California’s water does highlight ongoing tensions over the conservation and management of this finite resource. As the climate crisis worsens, it’s expected to exacerbate heat waves and droughts, bringing water shortages and increasingly devastating fires like those currently scorching southern California. The situation in Los Angeles is already a catastrophe. Climate change-induced water shortages will make imminent disasters even worse.

In the face of this grim reality, it’s worth revisiting one of the major water-guzzling industries that’s hastening future crises from California’s own backyard: artificial intelligence (AI).

Silicon Valley is the epicenter of the global AI boom, and hundreds of Bay Area tech companies are investing in AI development. Meanwhile, in the southern region of the state, real estate developers are rushing to build new data centers to accommodate expanded cloud computing and AI technologies. The Los Angeles Times reported in September that data center construction in Los Angeles County had reached “extraordinary levels,” increasing more than sevenfold in two years.

This technology’s environmental footprint is tremendous. AI requires massive amounts of electrical power to support its activities and millions of gallons of water to cool its data centers. One study predicts that, within the next five years, AI-driven data centers could produce enough air pollution to surpass the emissions of all cars in California.

Data centers on their own are water-intensive; California is home to at least 239. One study shows that a large data center can consume up to 5 million gallons of water per day, or as much as a town of 50,000 people. In The Dalles, Oregon, a local paper found that a Google data center used over a quarter of the city’s water. Artificial intelligence is even more thirsty: Reporting by The Washington Post found that Meta used 22 million liters of water simply training its open source AI model, and UC Riverside researchers have calculated that, in just two years, global AI use could require four to six times as much water as the entire nation of Denmark.
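The comparisons in this paragraph can be checked with a few lines of arithmetic. The Python sketch below assumes the gallon figures are US gallons; the variable names are illustrative, and the “days equivalent” line simply sets Meta’s reported training water against the 5-million-gallon daily upper bound quoted above.

```python
# Back-of-the-envelope checks on the water figures quoted above.
# Assumption: "gallons" means US gallons (1 US gal = 3.785411784 L exactly).

LITERS_PER_US_GALLON = 3.785411784

# "up to 5 million gallons of water per day, or as much as a town of 50,000"
data_center_gal_per_day = 5_000_000
town_population = 50_000
gal_per_person_per_day = data_center_gal_per_day / town_population

# "Meta used 22 million liters of water simply training its ... AI model"
meta_training_liters = 22_000_000
meta_training_gallons = meta_training_liters / LITERS_PER_US_GALLON

# How many days of a large data center's peak consumption that equals.
days_equivalent = meta_training_gallons / data_center_gal_per_day

print(f"{gal_per_person_per_day:.0f} gallons per resident per day")
print(f"{meta_training_gallons / 1e6:.1f} million US gallons for training")
print(f"about {days_equivalent:.1f} days of a large data center's use")
```

The town comparison works out to about 100 gallons per resident per day, and Meta’s training figure to roughly 5.8 million US gallons.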

Many U.S. data centers are based in the western portion of the country, including California, where wind and solar power are more plentiful — and where water is already scarce. In 2022, a researcher at Virginia Tech estimated that about one-fifth of data centers in the U.S. draw water from “moderately to highly stressed watersheds.”

According to the Fifth National Climate Assessment, the U.S. government’s leading report on climate change, California is among the top five states suffering economic impacts from climate crisis-induced natural disasters. California is already dealing with the effects of one water-heavy industry; the Central Valley, which feeds the whole country, is one of the world’s most productive agricultural regions, and the Central Valley aquifer ranks as one of the most stressed aquifers in the world. ClimateCheck, a website that uses climate models to predict properties’ natural disaster threat levels, says that California ranks number two in the country for drought risk.

In August 2021, the U.S. Bureau of Reclamation declared the first-ever water shortage on the Colorado River, which supplies water to California — including roughly a third of southern California’s urban water supply — as well as six other states, 30 tribal nations and Mexico. The Colorado River water allotments have been highly contested for more than a century, but the worsening climate crisis has thrown the fraught agreements into sharp relief. Last year, California, Nevada and Arizona agreed to long-term cuts to their shares of the river’s water supply.

Despite the precarity of the water supply, southern California’s Imperial Valley, which holds the rights to 3.1 million acre-feet of Colorado River water, is actively seeking to recruit data centers to the region.

“Imperial Valley is a relatively untapped opportunity for the data center industry,” states a page on the Imperial Valley Economic Development Corporation’s website. “With the lowest energy rates in the state, abundant and inexpensive Colorado River water resources, low-cost land, fiber connectivity and low risk for natural disasters, the Imperial Valley is assuredly an ideal location.” A company called CalEthos is currently building a 315-acre data center in the Imperial Valley, which it says will be powered by clean energy and an “efficient” cooling system that will use partially recirculated water. In the bordering state of Arizona, Meta’s Mesa data center also draws from the dwindling Colorado River.

The climate crisis is here, but organizers are not succumbing to nihilism. Across the country, community groups have fought back against big tech companies and their data centers, citing the devastating environmental impacts. And there’s evidence that local pushback can work. In the small towns of Peculiar, Missouri, and Chesterton, Indiana, community campaigns have halted companies’ data center plans.

“The data center industry is in growth mode,” Jon Reigel, who was involved in the Chesterton fight, told The Washington Post in October. “And every place they try to put one, there’s probably going to be resistance. The more places they put them the more resistance will spread.” https://truthout.org/articles/while-los-angeles-burns-ai-fans-the-flames/?utm_source=Truthout&utm_campaign=3634e1951f-EMAIL_CAMPAIGN_2025_01_11_08_34&utm_medium=email&utm_term=0_bbb541a1db-3634e1951f-650192793

Lawsuit challenges NRC on SMR regulation

Friday, 10 January 2025, https://www.world-nuclear-news.org/articles/lawsuit-challenges-nrc-on-smr-regulation

The States of Texas and Utah and microreactor developer Last Energy Inc are challenging the US regulator over its application of a rule it adopted in 1956 to small modular reactors and research and test reactors.

Under the US Nuclear Regulatory Commission (NRC) Utilization Facility Rule, all US reactors are required to obtain NRC construction and operating licences regardless of their size, the amount of nuclear material they use or the risks associated with their operation. The plaintiffs say this imposes “complicated, costly, and time-intensive requirements that even the smallest and safest SMRs and microreactors – down to those not strong enough to power an LED lightbulb” must satisfy to secure the necessary licences. This does not only affect microreactors: existing research and test reactors such as those at the universities in both Texas and Utah face “significant costs” to maintain their NRC operating licences, the plaintiffs say.

In the filing, Last Energy – developer of the PWR-20 microreactor – says it has invested “tens of millions of dollars” in developing small nuclear reactor technology, including USD2 million on manufacturing efforts in Texas alone, and has agreements to develop more than 50 nuclear reactor facilities across Europe. But although it has a “preference” to build in the USA, “Last Energy nonetheless has concluded it is only feasible to develop its projects abroad in order to access alternative regulatory frameworks that incorporate a de minimis standard for nuclear power permitting”.

Noting that only three new commercial reactors have been built in the USA over the past 28 years, the plaintiffs say building a new commercial reactor of any size in the country has become “virtually impossible” due to the rule, which they say is a “misreading” of the NRC’s own scope of authority.

They are asking the court to set aside the rule, “at least as applied to certain small, non-hazardous reactors”, and exempt their research reactors and Last Energy’s small modular reactors (SMRs) from the commission’s licensing requirements.

Houston, Texas-based law firm King & Spalding said the lawsuit, if it is successful, would “mark a turning point” in the US nuclear regulatory framework – but warns that it could also create greater uncertainty as advanced nuclear technologies get closer to commercial readiness.

“Regardless of the outcome, the Plaintiffs’ lawsuit highlights the challenges in applying the Utilization Facility Rule to the advanced nuclear reactors now under development in the US,” the firm said in an analysis released on 9 January.

But the NRC is already addressing the issue: in 2023, it began the rulemaking process to establish an optional technology-inclusive regulatory framework for new commercial advanced nuclear reactors, which would include risk-informed and performance-based methods “flexible and practicable for application to a variety of advanced reactor technologies”. SECY-23-0021: Proposed Rule: Risk-Informed, Technology-Inclusive Regulatory Framework for Advanced Reactors is currently open for public comment until 28 February, and the NRC has said it expects to issue a final rule “no later than the end of 2027”.

The lawsuit has been filed with the US District Court in the Eastern District of Texas.

Deep Fission to supply Endeavour data centers with 2GW of nuclear energy from “mile-deep” SMR

The first reactors are expected to come online in 2029

DCD, January 07, 2025 By Zachary Skidmore

Deep Fission, a small modular nuclear reactor (SMR) developer, has partnered with Endeavour Energy, a US sustainable infrastructure developer, to develop and deploy its technology at scale.

As per the agreement, the partners have committed to co-developing 2GW of nuclear energy to supply Endeavour’s global portfolio of data centers which operate under the Endeavour Edged brand. The first reactors are expected to be operational by 2029.

The Deep Fission Borehole Reactor 1 (DFBR-1) is a pressurized water reactor (PWR) that produces 15MWt (thermal) and 5MWe (electric) and has an estimated fuel cycle of between ten and 20 years…………………………………
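The two ratings quoted for DFBR-1 also say something about scale. A minimal Python sketch, assuming that the 2GW commitment refers to electric capacity and that it would be met entirely by DFBR-1 units at their full 5MWe rating (neither assumption is stated in the announcement), works out the conversion efficiency and the implied number of reactors:

```python
import math

# Figures quoted for a single Deep Fission Borehole Reactor 1 (DFBR-1).
DFBR1_THERMAL_MW = 15.0   # MWt
DFBR1_ELECTRIC_MW = 5.0   # MWe

# Fraction of reactor heat converted to electricity.
efficiency = DFBR1_ELECTRIC_MW / DFBR1_THERMAL_MW

# Assumption: the 2GW commitment is electric capacity delivered by DFBR-1
# units running at their full rated output.
TARGET_MW = 2_000.0
units_needed = math.ceil(TARGET_MW / DFBR1_ELECTRIC_MW)

print(f"thermal-to-electric efficiency: {efficiency:.0%}")
print(f"DFBR-1 units for 2GW: {units_needed}")
```

On those assumptions the partnership would need about 400 reactors, each converting roughly a third of its heat into electricity.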

Deep Fission plans to release white papers throughout the regulatory approval process for discussion and direction on key issues surrounding the SMR………………………………..

Based in Berkeley, California, Deep Fission was founded in 2023. In August last year, it announced a $4 million pre-seed funding round to accelerate efforts in hiring, regulatory approval, and the commercialization of its SMR.

Edged, Endeavour’s data center arm, will be the primary beneficiary of the power produced by DFBR-1.

The company, which was formed in 2021, has data centers across the US and the Iberian peninsula, with facilities in operation or development in Madrid, Barcelona, Lisbon, and across the US, including Missouri, Arizona, Texas, Georgia, Iowa, Ohio, and Illinois.

The company specializes in data centers built for high-density artificial intelligence, which utilize a waterless cooling system…………………….. https://www.datacenterdynamics.com/en/news/deep-fission-to-supply-endeavour-data-centers-with-2gw-of-nuclear-energy-from-mile-deep-smr/

Nuclear energy groups race to develop ‘microreactors’

Companies vie to create small plants for deployment to sites from data centres to oil platforms

FT.com, Malcolm Moore and George Steer in London, 9 Jan 25

Nuclear energy companies are trying to shrink reactors to the size of shipping containers in a bid to compete with electric batteries as a source of zero-carbon energy. Led by Westinghouse, the race to develop “microreactors” is based on the notion they can replace diesel and gas generators used by everything from data centres to remote off-grid communities to offshore oil and gas platforms.

Microreactors have a much smaller output of up to 20MW, enough to power roughly 20,000 homes, and are likely to operate like large batteries, with no control room or workers on site. The reactors would be transported to a site, plugged in and left to run for several years before being taken back to their manufacturer for refuelling. Westinghouse in December won approval from US nuclear regulators for a control system that will eventually allow the 8MW eVinci to be operated remotely. The reactor, which has minimal moving parts, uses pipes filled with liquid sodium to draw heat from its nuclear fuel and transfer it to the surrounding air, which can then run a turbine to produce electricity or be pumped into heating systems.
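The homes-per-megawatt comparison implies an average household load. A minimal sketch, assuming the reactor runs continuously at its full rated output (real availability would be lower), derives that load and applies the same ratio to the 8MW eVinci:

```python
# Implied household demand behind "up to 20MW, enough to power roughly 20,000 homes".
# Assumption: the reactor runs continuously at its full rated output.
HOURS_PER_YEAR = 8_760

microreactor_mw = 20
homes_served = 20_000

kw_per_home = microreactor_mw * 1_000 / homes_served   # average load per home
kwh_per_home_per_year = kw_per_home * HOURS_PER_YEAR

# Applying the same ratio to Westinghouse's 8MW eVinci.
evinci_mw = 8
evinci_homes = evinci_mw * 1_000 / kw_per_home

print(f"{kw_per_home:.1f} kW average per home, {kwh_per_home_per_year:,.0f} kWh/year")
print(f"eVinci at 8MW: roughly {evinci_homes:,.0f} homes")
```

By that rule of thumb each home draws about 1 kW on average, and an 8MW eVinci would cover roughly 8,000 homes.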

“Our goal is to be able to operate autonomously from a central location where we can just simply monitor a fleet of reactors that are deployed around the world,” said Ball…………………………………………

Ball said two of the target markets for eVinci reactors were data centres and the oil and gas industry, both on and offshore. He said the ability to run several microreactors side by side would make data centres more resilient than with a single source of energy.

…………………………………………………………………. But J Clay Sell, chief executive of X-energy, said the market for microreactors was “still emerging”. “We’ve probably invested as much as anyone in the sector,” he said. “But when you go down in size, the economics become much more challenged. You have to get to a greater level of scale for microreactors to become economic.”

………………………………………. there are questions over how to build, transport and run microreactors safely, said Ronan Tanguy, programme lead for safety and licensing at the World Nuclear Association. Regulators still have to draw up rules around whether microreactors can be operated remotely and how to make them safe from cyber attacks. Rules are also needed around transporting them, especially across national borders, and whether they should be fuelled in a factory or on site. Given their smaller size, they may also pose an easier target for nuclear fuel theft…………………. https://www.ft.com/content/a4c98cb2-797a-4943-9643-2fd75accfd59

Could AI soon make dozens of billion-dollar nuclear stealth attack submarines more expensive and obsolete?

By Wayne Williams, 5 Jan 25, https://www.techradar.com/pro/could-ai-soon-make-dozens-of-billion-dollar-nuclear-stealth-attack-submarines-more-expensive-and-obsolete

Artificial intelligence can detect undersea movement better than humans.

AI can process far more data from far more sensors than human operators can ever achieve

But the game of cat-and-mouse means that countermeasures do exist to confuse AI

Increases in compute performance and the ubiquity of always-on passive sensors must also be accounted for.

The rise of AI is set to reduce the effectiveness of nuclear stealth attack submarines.

These advanced billion-dollar subs, designed to operate undetected in hostile waters, have long been at the forefront of naval defense. However, AI-driven advancements in sensor technology and data analysis are threatening their covert capabilities, potentially rendering them less effective.

An article by Foreign Policy and IEEE Spectrum now claims AI systems can process vast amounts of data from distributed sensor networks, far surpassing the capabilities of human operators. Quantum sensors, underwater surveillance arrays, and satellite-based imaging now collect detailed environmental data, while AI algorithms can identify even subtle anomalies, such as disturbances caused by submarines. Unlike human analysts, who might overlook minor patterns, AI excels at spotting these tiny shifts, increasing the effectiveness of detection systems.

Game of cat-and-mouse

AI’s increasing role could challenge the stealth of submarines like those in the Virginia-class, which rely on sophisticated engineering to minimize their detectable signatures.

Noise-dampening tiles, vibration-reducing materials, and pump-jet propulsors are designed to evade detection, but AI-enabled networks are increasingly adept at overcoming these methods. The ubiquity of passive sensors and continuous improvements in computational performance are increasing the reach and resolution of these detection systems, creating an environment of heightened transparency in the oceans.

Despite these advances, the game of cat-and-mouse persists, as countermeasures are, inevitably, being developed to outwit AI detection.

These tactics, as explored in the Foreign Policy and IEEE Spectrum piece, include noise-camouflaging techniques that mimic natural marine sounds, deploying uncrewed underwater vehicles (UUVs) to create diversions, and even cyberattacks aimed at corrupting the integrity of AI algorithms. Such methods seek to confuse and overwhelm AI systems, maintaining an edge in undersea warfare.

As AI technology evolves, nations will need to weigh up the escalating costs of nuclear stealth submarines against the potential for their obsolescence. Countermeasures may provide a temporary degree of relief, but the increasing prevalence of passive sensors and AI-driven analysis suggests that traditional submarine stealth is likely to face diminishing returns in the long term.

The Quiet Crisis Above: Unveiling the Dark Side of Space Militarization

By Justin James McShane, GeopoliticsUnplugged, Nov 28, 2024

Summary:

In this episode, we examine the growing militarization of space, focusing on the development and testing of anti-satellite weapons (ASATs) by various nations, including the U.S., Russia, China, and India. We detail the history of space militarization, from the Cold War to the present, highlighting the dangers of space debris and the inadequacy of existing treaties like the Outer Space Treaty in addressing modern threats. Different types of ASATs are described, both kinetic and non-kinetic, along with electronic warfare systems used for disrupting satellites. We also discuss the lack of international cooperation and robust enforcement mechanisms to prevent an arms race in space, emphasizing the need for new agreements to ensure the peaceful use of outer space. Ultimately, we warn of the potential for space to become a new theater of conflict.………………………………………………….

https://geopoliticsunplugged.substack.com/p/ep93-the-quiet-crisis-above-unveiling-ed9

Departing Air Force Secretary Will Leave Space Weaponry as a Legacy

MSN, by Eric Lipton, 30 Dec 24

WASHINGTON — Weapons in space. Fighter jets powered by artificial intelligence.

As the Biden administration comes to a close, one of its legacies will be kicking off the transformation of the nearly 80-year-old U.S. Air Force under the orchestration of its secretary, Frank Kendall.

When he leaves office in January — after more than five decades at the Defense Department and as a military contractor, including nearly four years as Air Force secretary — Mr. Kendall, 75, will have set the stage for a transition that is not only changing how the Air Force is organized but how global wars will be fought.

One of the biggest elements of this shift is the move by the United States to prepare for potential space conflict with Russia, China or some other nation.

In a way, space has been a military zone since the Germans first reached it in 1944 with their V2 rockets that left the earth’s atmosphere before they rained down on London, causing hundreds of deaths. Now, at Mr. Kendall’s direction, the United States is preparing to take that concept to a new level by deploying space-based weapons that can disable or disrupt the growing fleet of Chinese or Russian military satellites………………………

Perhaps of equal significance is the Air Force’s shift under Mr. Kendall to rapidly acquire a new type of fighter jet: a missile-carrying robot that in some cases could make kill decisions without human approval of each individual strike.

In short, artificial-intelligence-enhanced fighter jets and space-based warfare are not just ideas in some science fiction movie. Before the end of this decade, both are slated to be an operational part of the Air Force because of choices Mr. Kendall made or helped accelerate.

The Pentagon is the largest bureaucracy in the world. But Mr. Kendall has shown, more than most of its senior officials, that it too can be forced to innovate.

“It is big,” said Richard Hallion, a military historian and retired senior Pentagon adviser, describing the change underway at the Air Force. “We have seen the maturation of a diffuse group of technologies that, taken together, have forced a transformation of the American military structure.”

Mr. Kendall is an unusual figure to be the top civilian executive at the Air Force, a job he was appointed to by President Biden in 2021, overseeing a $215 billion budget and 700,000 employees. …

Mr. Kendall, who has a folksy demeanor more like a college professor than a top military leader, comes at the job in a way that recalls his graduate training as an engineer.

He gets fixated on both the mechanics and the design process of the military systems his teams are building at a cost of billions of dollars. Mr. Kendall and Gen. David Allvin, the department’s top uniformed officer, have called this effort “optimizing the Air Force for great power competition.” …

Mr. Kendall has taken these innovations — built out during earlier waves of change at the Air Force — and amped up the focus on autonomy even more through a program called Collaborative Combat Aircraft.

These new missile-carrying robot drones will rely on A.I.-enhanced software that not only allows them to fly on their own but to independently make certain vital mission decisions, such as what route to fly or how best to identify and attack enemy targets.

The plan is to have three or four of these robot drones fly as part of a team run by a human-piloted fighter jet, allowing the less expensive drone to take greater risks, such as flying ahead to attack enemy missile defense systems before Navy ships or piloted aircraft join the assault.

Mr. Kendall, in an earlier interview with The Times, said this kind of device would require society to more broadly accept that individual kill decisions will increasingly be made by robots. …

These new collaborative combat aircraft — which will cost as much as about $25 million each, compared to the approximately $80 million price for a manned F-35 fighter jet — are being built for the Air Force by two sets of vendors. One group is assembling the first of these new jets while a second is creating the software that allows them to fly autonomously and make key mission decisions on their own.

This is also a major departure for the Air Force, which usually relies on a single prime contractor to do both, and a sign of just how important the software is: the brain that will effectively fly these robotic fighter jets. …

Space is now a fighting zone, Mr. Kendall acknowledged, like the oceans of the earth or battlefields on the ground.

The United States, Russia and China each tested sending missiles into space to destroy satellites starting decades ago, although the United States has since disavowed this kind of weapon because of the destructive debris fields it creates in orbit.

So during his tenure, the Air Force started to build out a suite of what Mr. Kendall called “low-debris-causing weapons” that will be able to disrupt or disable Chinese or other enemy satellites, the first of which is expected to be operational by 2026.

Mr. Kendall and Gen. Chance Saltzman, the chief of Space Operations, would not specify how these American systems will work. But other former Pentagon officials have said they likely will include electronic jamming, cyberattacks, lasers, high-powered microwave systems or even U.S. satellites that can grab or move enemy satellites.

The Space Force, over the last three years, has also been rapidly building out its own new network of low-earth-orbit satellites to make the military gear in space much harder to disable, as there will be hundreds of cheaper, smaller satellites, instead of a few very vulnerable targets.

Mr. Kendall said when he first came into office, there was an understandable aversion to weaponizing space, but that now the debate about “the sanctity or purity of space” is effectively over.

“Space is a vacuum that surrounds Earth,” Mr. Kendall said. “It’s a place that can be used for military advantage and it is being used for that. We can’t just ignore that on some obscure, esoteric principle that says we shouldn’t put weapons in space and maintain it. That’s not logical for me. Not logical at all. The threat is there. It’s a domain we have to be competitive in.”

https://www.msn.com/en-us/news/politics/departing-air-force-secretary-will-leave-space-weaponry-as-a-legacy/ar-AA1wE4iS
